“Two LLMs walk into a graph… and the punchline is a podcast.”

Writing a captivating podcast script can feel like performing a live juggling act while riding a unicycle—fun and mildly terrifying. Luckily, Agentic AI frameworks like LangChain and LangGraph let us hand that unicycle to our favorite large‑language models (LLMs) and watch them perform the stunt for us.

In this post you’ll learn:

- What LangChain, LangGraph, and Agentic AI actually are (minus the buzzword bingo).

- How to wire up OpenAI GPT and Google Gemini into a tiny agentic pipeline.

- How the provided Python script discovers trending topics and spits out an engaging podcast script—while saving the markdown and an adorable Mermaid diagram.

- A few jokes along the way, because let’s be honest: robots writing podcasts is already comedy gold.

1 — Speed‑Date Introductions

| Concept | Elevator Pitch |
|---|---|
| LangChain | A modular toolbox that lets you glue LLMs, vector stores, tools, and prompts together faster than you can say “chain of thought.” |
| LangGraph | A graph‑oriented runtime built on LangChain. Nodes = functions, edges = flow control. Perfect when your logic looks more like a subway map than a straight line. |
| Agentic AI | Systems where LLMs don’t just respond, they act: decide, call tools, loop, and otherwise behave like caffeine‑fuelled interns who never sleep. |

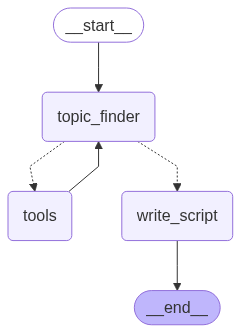

2 — The High‑Level Flow

- User: “Write me a podcast about the hottest Agentic AI topic.”

- Topic‑Finder Node: Asks GPT‑4o‑mini to suggest a topic.

- Router: If GPT needs external info, it triggers the do_search tool (powered by Google Serper).

- Writer Node: Gemini 2.0‑flash turns the final topic into a cozy, listener‑friendly script.

- Graph: Compiled by LangGraph, drawn as Mermaid, executed, and voilà: script.md lands in your folder.

Mermaid diagram generation and execution are handled automatically inside buildGraph().
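To make the flow above concrete, here is a minimal sketch that turns the same edges into Mermaid flowchart text. The `to_mermaid` helper and the edge labels are hypothetical illustrations, not what buildGraph() or LangGraph actually run; only the node names come from this post.

```python
# Hypothetical helper: turn (source, target, label) edges into Mermaid text.
# This is an illustration only, not LangGraph's own renderer.
EDGES = [
    ("START", "topic_finder", ""),
    ("topic_finder", "tools", "tool call requested"),
    ("topic_finder", "write_script", "topic chosen"),
    ("tools", "topic_finder", ""),
    ("write_script", "END", ""),
]


def to_mermaid(edges):
    """Return a top-down Mermaid flowchart with one line per edge."""
    lines = ["flowchart TD"]
    for source, target, label in edges:
        # Labelled edges use Mermaid's `-->|text|` syntax.
        arrow = f"-->|{label}|" if label else "-->"
        lines.append(f"    {source} {arrow} {target}")
    return "\n".join(lines)


mermaid_text = to_mermaid(EDGES)
print(mermaid_text)
```

Paste the printed text into any Mermaid viewer and you get the same loop: the topic finder bounces to the tools node and back until it has a topic, then hands off to the writer.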

3 — Walking Through the Code

3.1 — Imports & Environment Setup

What’s happening here? Before diving into graph logic, we need to gather all our cooking utensils—import LangGraph/LangChain classes, set environment variables, and pull in any helper libraries. Think mise en place, but for Python.

    from typing_extensions import TypedDict
    from typing import Annotated
    from langgraph.graph import StateGraph, START, END
    from langgraph.graph.message import add_messages
    ...

These imports pull in LangGraph primitives, OpenAI and Gemini wrappers, and the Serper search utility. load_dotenv() sneaks your API keys in through environment variables.
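A missing API key tends to surface as a confusing error halfway through a run, so a small pre-flight check can help. The sketch below is an assumption layered on top of the script, not part of it: `missing_keys` is a hypothetical helper, and the key names are the ones listed in the setup steps of this post.

```python
import os

# Key names taken from this post's setup steps; the helper is hypothetical.
REQUIRED_KEYS = ("OPENAI_API_KEY", "GOOGLE_API_KEY", "SERPER_API_KEY")


def missing_keys(env, required=REQUIRED_KEYS):
    """Return the required keys that are absent or empty in `env`."""
    return [key for key in required if not env.get(key)]


# Demo with a fake environment, so no real secrets are involved.
fake_env = {"OPENAI_API_KEY": "sk-test", "GOOGLE_API_KEY": ""}
print(missing_keys(fake_env))  # ['GOOGLE_API_KEY', 'SERPER_API_KEY']

# In a real run you could call missing_keys(os.environ) right after
# load_dotenv() and stop early if the returned list is not empty.
```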

3.2 — State Definition

Why do we need a state? Every graph needs a small suitcase to carry data from one node to the next. In LangGraph, that suitcase is a TypedDict or a Pydantic model you define yourself. This example uses a TypedDict.

    class State(TypedDict):
        messages: Annotated[list[AnyMessage], add_messages]

A minimalist graph state: just a list of LangChain messages that each node can append to.
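If the `Annotated[..., add_messages]` part looks like magic, the idea is that the second argument is a reducer: a function that merges a node's update into the existing value instead of overwriting it. The following is a simplified, standard-library-only sketch of that idea. `append_messages`, `ToyState`, and `apply_update` are hypothetical names, and the real `add_messages` does more than plain list concatenation.

```python
from typing import Annotated, TypedDict, get_type_hints


def append_messages(existing: list, new: list) -> list:
    """Toy reducer: return a new list with the update appended."""
    return existing + new


class ToyState(TypedDict):
    # The reducer travels with the type as Annotated metadata.
    messages: Annotated[list, append_messages]


def apply_update(state: dict, update: dict) -> dict:
    """Merge a node's partial update into the state via each key's reducer."""
    hints = get_type_hints(ToyState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        metadata = getattr(hints[key], "__metadata__", ())
        reducer = metadata[0] if metadata else None
        # Keys without a reducer are simply overwritten.
        merged[key] = reducer(state.get(key, []), value) if reducer else value
    return merged


state = {"messages": ["user: find a topic"]}
state = apply_update(state, {"messages": ["ai: how about tool-calling agents?"]})
print(state["messages"])
```

This is why each node in the post can return just `{"messages": [result]}`: the update is appended to the history rather than replacing it.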

3.3 — Tool: do_search

Tools in LangGraph/LangChain are self‑contained functions—mini‑APIs that extend an LLM’s superpowers. Need live data? Trigger a search tool. Need math? Call a calculator. They’re like the Swiss‑Army attachments on your AI pocket‑knife, and the agent decides when to flip each one open.

How does the LLM know which wrench to grab? Thanks to each tool’s description (and any JSON schema you attach), the model compares the user’s request against those definitions and decides whether calling a tool will improve the answer. In our graph, do_search only springs into action when GPT realizes it’s short on facts.

What’s this step for? LLMs are smart, but sometimes they need to “Google it” like the rest of us. This helper function lets agents call out to Serper for fresh facts.

    def do_search(title: str):
        """Search for any details online for the given title.

        Args:
            title: The title used as input to the search method; it's a string value.
        """
        serper = GoogleSerperAPIWrapper()
        return serper.run(title)

A single‑function “tool” that fetches‑and‑returns search results so GPT doesn’t hallucinate the population of Mars (again).
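To see why the docstring matters, it helps to picture what the model is shown: roughly a name, a description, and a parameter list. The sketch below builds a simplified description from a function using only the standard library. `describe_tool` and `do_search_stub` are hypothetical, and the real schema that LangChain sends to the model is richer than this dictionary.

```python
import inspect


def do_search_stub(title: str):
    """Search for any details online for the given title."""
    return f"results for {title}"


def describe_tool(func):
    """Build a simplified, hypothetical tool description from a function."""
    params = {}
    for name, param in inspect.signature(func).parameters.items():
        if param.annotation is inspect.Parameter.empty:
            params[name] = "any"  # no type hint available
        else:
            params[name] = getattr(param.annotation, "__name__", str(param.annotation))
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": params,
    }


tool_spec = describe_tool(do_search_stub)
print(tool_spec)
```

A vague docstring produces a vague description, which makes it harder for the model to judge when the tool is worth calling.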

3.4 — Node: find_topic

Purpose in life? Choose the show’s headline. This node asks GPT‑4o‑mini for topic ideas, optionally runs do_search, and sends back either a list of possibilities or the final winner.

    def find_topic(state: State):
        # The latest message is either the user's request or a tool result.
        title = state["messages"][-1]
        llm = ChatOpenAI(model="gpt-4o-mini")

        if title.name is None:
            # No tool result yet: ask the model to search for candidate topics.
            prompt = f"Search for '{title.content}' using the tool and in the response provide me the list of topics for this title. If you don't find any answer from the tool then simply respond 'don't know'"
            llm_with_tools = llm.bind_tools([do_search])
            result = llm_with_tools.invoke(prompt)
        else:
            # A tool result (which carries the tool's name) is available: pick one title.
            prompt = f"'{title.content}' From these trending topics, pick one title and provide the title alone in the response"
            result = llm.invoke(prompt)

        return {"messages": [result]}

Because sometimes GPT just needs to fact‑check itself.

3.5 — Node: topic_router

Why a router? Not every conversation needs a pit stop at the research tool. This tiny traffic‑cop looks at the latest message: if there’s a tool_call, it routes to the tools node, otherwise it speeds on to write_script—complete with humorous whistle noises in the logs.

Code snippet:

    def topic_router(state: State):
        last_message = state["messages"][-1]
        if hasattr(last_message, "tool_calls") and last_message.tool_calls:
            return "tools"
        else:
            return "write_script"

See?—small but mighty.
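Because the router only inspects the last message, you can try it out without calling any LLM. Here is a small sketch using stand-in message objects. The `SimpleNamespace` stubs are assumptions for illustration (real runs pass LangChain message objects), and the router is restated in a slightly more compact form.

```python
from types import SimpleNamespace


def topic_router(state):
    """Route to the tools node only when the last message requests a tool."""
    last_message = state["messages"][-1]
    if getattr(last_message, "tool_calls", None):
        return "tools"
    return "write_script"


# Stand-ins for messages: one asking for a search, one with a final answer,
# and one with no tool_calls attribute at all.
wants_search = SimpleNamespace(
    content="",
    tool_calls=[{"name": "do_search", "args": {"title": "Agentic AI"}}],
)
has_answer = SimpleNamespace(content="Multi-agent orchestration", tool_calls=[])
plain_text = SimpleNamespace(content="hello")

print(topic_router({"messages": [wants_search]}))  # tools
print(topic_router({"messages": [has_answer]}))    # write_script
print(topic_router({"messages": [plain_text]}))    # write_script
```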

3.6 — Node: write_script

Big payoff time! This is where Gemini 2.0‑flash turns the chosen topic into a fully‑fledged podcast script and writes it to script.md.

    def write_script(state: State):
        # The last message holds the topic chosen by find_topic.
        title = state["messages"][-1].content
        prompt = f"""
        Title: {title}
        Write a short, engaging podcast script...
        """
        # google_api_key is assumed to be defined during setup (not shown here).
        gemini_client = ChatGoogleGenerativeAI(model="gemini-2.0-flash", api_key=google_api_key)
        result = gemini_client.invoke(prompt)

        # Save the script content to script.md
        with open("script.md", "w", encoding="utf-8") as f:
            f.write(f"# {title}\n\n")
            f.write(result.content)

It then saves the output, because good content deserves a cozy markdown home.

3.7 — Graph Assembly & Execution

Bringing it all together. Now we wire up the subway map—connect nodes, define edges, compile, and press the big red Run button.

    # Assumed setup: the builder is created from the State type defined earlier.
    graph_builder = StateGraph(State)

    graph_builder.add_node("topic_finder", find_topic)
    graph_builder.add_edge(START, "topic_finder")
    graph_builder.add_node("tools", ToolNode(tools=[do_search]))
    graph_builder.add_node("write_script", write_script)

    # After topic_finder, the router picks the next node by name.
    graph_builder.add_conditional_edges(
        "topic_finder", topic_router, {"tools": "tools", "write_script": "write_script"}
    )
    graph_builder.add_edge("tools", "topic_finder")
    graph_builder.add_edge("write_script", END)

    graph = graph_builder.compile()
    graph.invoke({
        "messages": "Find the number 1 trending topic on Agentic AI as of today, then write a podcast script for it"
    })

LangGraph handles the heavy lifting, renders a Mermaid PNG, and kicks off the flow, all in about a dozen lines.

When you run the script, you’ll find:

- graph_image.png: visual candy for blog posts & slide decks.

- script.md: a ready‑to‑read (or ready‑to‑record) podcast script.
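To see the order in which the nodes fire, here is a toy, LLM-free walk through the same wiring. Everything in it is a stand-in: the `fake_*` nodes, the hard-coded topic, and the hand-rolled `run` loop are hypothetical and only mimic the edges defined above. LangGraph's real runtime does far more.

```python
from types import SimpleNamespace


def msg(content, name=None, tool_calls=None):
    """Tiny stand-in for a chat message."""
    return SimpleNamespace(content=content, name=name, tool_calls=tool_calls or [])


def fake_find_topic(state):
    last = state["messages"][-1]
    if last.name is None:
        # No tool result yet, so pretend the model asks for a search.
        return {"messages": [msg("", tool_calls=[{"name": "do_search"}])]}
    # A tool result is present, so pretend the model settles on a title.
    return {"messages": [msg("Tool-calling agents")]}


def fake_tools(state):
    return {"messages": [msg("Tool-calling agents; agent memory", name="do_search")]}


def fake_write_script(state):
    title = state["messages"][-1].content
    return {"messages": [msg(f"# {title}\n\nWelcome to the show!")]}


def router(state):
    return "tools" if state["messages"][-1].tool_calls else "write_script"


NODES = {"topic_finder": fake_find_topic, "tools": fake_tools, "write_script": fake_write_script}


def run(user_request):
    state = {"messages": [msg(user_request)]}
    current, visited = "topic_finder", []
    while current != "END":
        visited.append(current)
        state["messages"] = state["messages"] + NODES[current](state)["messages"]
        if current == "topic_finder":
            current = router(state)      # conditional edge
        elif current == "tools":
            current = "topic_finder"     # tools always loops back
        else:
            current = "END"              # write_script finishes the run
    return visited, state


visited, final_state = run("Find a trending Agentic AI topic")
print(visited)
```

The visit order shows the loop: the topic finder runs twice, once before the search and once after it.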

4 — Why This Matters

- Rapid ideation: Fresh topics fetched in real time.

- Multi‑LLM synergy: GPT for planning, Gemini for prose—like a buddy‑cop movie but with fewer explosions.

- Reusable pattern: Swap write_script with write_blog, compose_email, or generate_song_lyrics_about_tacos.
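One way to make that swap cheap is a small factory that builds writer nodes from a set of instructions, an output path, and a text generator. This is a sketch under stated assumptions: `make_writer_node` and `fake_generate` are hypothetical names, messages are plain strings for simplicity, and a real node would call an LLM client instead of the fake generator.

```python
import os
import tempfile


def make_writer_node(instructions, output_path, generate):
    """Return a node function that writes generated text to `output_path`."""
    def node(state):
        title = state["messages"][-1]
        text = generate(f"Title: {title}\n\n{instructions}")
        with open(output_path, "w", encoding="utf-8") as f:
            f.write(f"# {title}\n\n{text}")
        return {"messages": [text]}
    return node


def fake_generate(prompt):
    """Stand-in for an LLM call so the sketch runs offline."""
    return "Draft text."


# Build a blog-writing node that saves into a temporary folder.
demo_dir = tempfile.mkdtemp()
blog_path = os.path.join(demo_dir, "blog.md")
write_blog = make_writer_node("Write a short blog post.", blog_path, fake_generate)
update = write_blog({"messages": ["Agentic AI"]})

with open(blog_path, encoding="utf-8") as f:
    print(f.read())
```

The same factory could produce an email or lyrics node by changing only the instructions and the output path.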

5 — Running & Extending

- Download the project from GitHub.

- Run pip install -r requirements.txt to install all dependencies.

- Add OPENAI_API_KEY, GOOGLE_API_KEY, and SERPER_API_KEY to your .env.

- Run the script and marvel at your robo‑writer.

- Extend nodes or add new tools (text‑to‑speech? automatic tweet‑storms?) with just a few more edges.

6 — Wrap‑Up

Congratulations! You’ve just built a mini agentic pipeline that turns breaking‑news topics into polished podcast scripts faster than you can say “Hey Siri, start my podcast career.” The only thing left is to hit record—and maybe invest in a pop filter so your plosives don’t scare the cat.

Happy graph‑hacking, and may your LLMs never hallucinate your outro!

Written By

I’m an Enterprise Architect at Akamai Technologies with over 14 years of experience in mobile app development across iOS, Android, Flutter, and cross-platform frameworks. I’ve built and launched 45+ apps on the App Store and Play Store, working with technologies like AR/VR, OTT, and IoT.

My core strengths include solution architecture, backend integration, cloud computing, CDN, CI/CD, and mobile security, including Frida-based pentesting and vulnerability analysis.

In the AI/ML space, I’ve worked on recommendation systems, NLP, LLM fine-tuning, and RAG-based applications. I’m currently focused on Agentic AI frameworks like LangGraph, LangChain, MCP and multi-agent LLMs to automate tasks.